Unless you’ve been living under a rock, you’re probably aware that artificial intelligence (AI) has now gone mainstream. But while AI has entered our everyday lives, many still don’t fully understand how it works or what makes it different from other technologies. If you’re feeling behind, you’re not alone. Personally, my head is spinning trying to keep up with the accelerating pace of change.

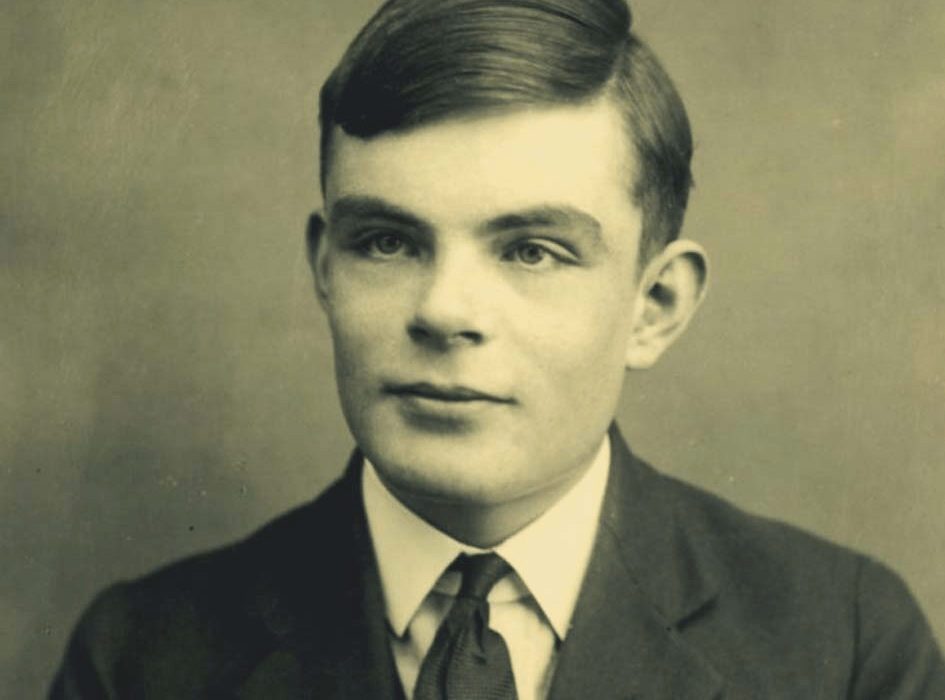

(BletchleyPark.org.uk)

AI as a concept has been around for decades. Alan Turing, the World War II codebreaker widely recognized as the ‘father of AI’, laid its logical foundation in the 1940s. In 1950 he proposed a test that still bears his name today: the Turing Test. In it, the goal of artificial, or machine, intelligence is to become indistinguishable from a real person. If a human judge holding text conversations with both a machine and a person can’t reliably tell the two apart, the machine has passed the test.

Since then, there’s been a pattern to how we treat non-human intelligence. Once AI accomplishes a task (like beating a human at chess or Go, or recognizing speech), people tend to stop calling it AI and move on to the next challenge. The fact that people now refer to AI by brand names, like ChatGPT, is another example of this pattern. Plus, “Chat Generative Pre-Trained Transformer” is a mouthful.

Models like it, collectively known as “transformer models,” are the latest breakthrough in AI’s evolution. They have been used in fields like natural language processing, coding, chemistry, biology, and physics. Some scientists have even used AI models in work that earned a Nobel Prize. However, training foundational models has largely followed a brute-force approach, which is resource-intensive, power-hungry, and expensive. Very expensive. OpenAI’s most recent funding round, its tenth, brought its total funding to almost $18 billion.

The company recently announced the launch of a new venture, the “Stargate Project.” The venture plans to invest $500 billion over the next four years building out infrastructure to support OpenAI’s growth. When announcing the project, the company touted that it would create jobs, secure American leadership in AI, and “generate massive economic benefit for the entire world.” (OpenAI)

Investing staggering sums into infrastructure projects is a hallmark of American ambition. From the railroads to the interstate highway system and GPS satellites, these projects often symbolize progress. However, history reminds us that these undertakings are not without their risks. A closer look at the Bureau of Reclamation’s dam-building era offers a timely lesson.

In his book “Cadillac Desert,” Marc Reisner details the Bureau’s push to bring water to the West by taming rivers with massive dams, Hoover being the most famous. Less known, however, is that these projects were often rushed, expensive, and unsustainable. Many dams were built to benefit a select few irrigation farmers while leaving taxpayers with the environmental and economic consequences.

The Bureau of Reclamation proposed Teton Dam in 1963 in response to severe drought in the preceding years. Despite concerns from citizens over its necessity, environmental impact, and costs, construction began in 1972. Much like the chaotic pace of AI development today, the dam would suffer from poor planning, a lack of site preparation, and a whole lot of ego. The dam was finished in November 1975, and its reservoir began to fill. The winter brought heavier-than-average snow, and by spring the water was filling 1,000 times faster than planned. But the show went on.

Teton Dam sprang a leak on June 3, 1976. It collapsed two days later, flooding towns downstream and killing eleven people.

Like the Bureau’s approach to dam building, many advancements in foundational models have come from putting capital to work through more computing power or ever-expanding data sets. The process works, but it produces systems that are expensive, inefficient, and environmentally costly. Like the Teton Dam, which cost millions but ultimately collapsed, these AI models risk leaving behind devastation, both economic and environmental.

OpenAI may have the lead in AI development, but the company’s foundation is questionable. A new competitor, China-based DeepSeek, may have exposed a leak.

DeepSeek burst onto the AI scene in recent weeks, announcing itself as a formidable competitor not only to OpenAI but to any business built on foundational models. Unlike Western models that have relied heavily on immense computing power, DeepSeek’s approach, which reportedly used less powerful NVIDIA chips, is both novel in its logic and more energy efficient.

In a shocking move, the company released its model weights to the public. This kind of release, often described as open source, lets researchers and developers worldwide explore, adapt, and refine the model. This transparency contrasts sharply with the proprietary nature of OpenAI’s models.


DeepSeek also took an interesting approach to building a foundational model, achieving high performance with what it claims is significantly lower computational and energy usage. Instead of activating the entire network for every piece of text it processes, its model uses a design known as a mixture of experts: it selectively engages only the necessary subsets, or “experts,” dramatically reducing computational waste. This method is intuitive and loosely mirrors how human brains function, focusing resources where they’re most needed. It’s like having a liberal arts background and being able to quickly identify the right specialty to address a given problem.
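For readers curious what “selectively engaging” looks like in practice, here is a toy Python sketch of top-k routing, the basic idea behind a mixture-of-experts layer. It is not DeepSeek’s code. The four “experts” are hypothetical stand-in functions, the gate scores are made up rather than computed by a learned router, and k=2 is an arbitrary choice. Only the two highest-scoring experts run; the others are skipped entirely, which is where the savings come from.

```python
import math
from typing import Callable, List, Tuple

# Hypothetical "expert": in a real model, each expert is a learned
# neural-network block; here it is just a simple function of one number.
Expert = Callable[[float], float]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw gate scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float, experts: List[Expert], gate_scores: List[float], k: int = 2
) -> Tuple[float, List[int]]:
    """Run only the k highest-scoring experts and blend their outputs.

    Returns the blended output and the indices of the experts that ran.
    """
    # Rank experts by gate score and keep the top k.
    chosen = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)[:k]
    # Re-weight over the chosen experts only.
    weights = softmax([gate_scores[i] for i in chosen])
    # Only the chosen experts are ever called; the rest do no work.
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


if __name__ == "__main__":
    experts: List[Expert] = [
        lambda x: x + 1,
        lambda x: 2 * x,
        lambda x: x * x,
        lambda x: -x,
    ]
    # Made-up gate scores; a real router computes these from the input.
    scores = [0.1, 2.0, 1.5, -1.0]
    result, used = moe_forward(3.0, experts, scores, k=2)
    print(used)              # [1, 2]: experts 0 and 3 never run
    print(round(result, 2))  # 7.13
```

In a real model, each expert is a large block of the network and this routing decision happens for every piece of text, so skipping most experts on each step adds up to a large reduction in computation.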

By focusing on reasoning-driven tasks, DeepSeek minimizes redundant computations and makes training more efficient. This efficiency reduces operational costs and mitigates the environmental impact of model training, addressing a growing concern in the tech community.

DeepSeek’s decision to open-source its model weights is a game-changer. By making its architecture accessible to researchers, the company has opened the door to increased competition and collaboration. This move could further accelerate innovation in AI while challenging the proprietary dominance of companies like OpenAI.

There is also exciting potential for DeepSeek’s training approach to bend the curve of electricity demand. Introducing fundamentally new methods and dramatically reducing computational waste could inspire a broader shift across the industry and lead to more sustainable development. If widely adopted, this efficiency-first philosophy could make AI more environmentally friendly and affordable.

For those intrigued by these advancements, now is the time to jump in! (Understanding Artificial Intelligence – This Saturday, Library Community Room) The pace of development continues to increase, and understanding the impacts of this technology on society is critical. Even if you don’t want to use AI, it’s important to understand generally how the various models work and the risks they pose. If you do choose to use AI, understanding the logic behind these systems, and questioning the sources of model training data, is key to ensuring its responsible use. As AI continues to shape our information sphere, having a foundational understanding is not just beneficial; it’s essential.